UTCTF 2019 - FaceSafe (1400pt)

ML Crafted Input

March 10, 2019

machine learning

FaceSafe (1400pt)

Exploit

Description: Can you get the secret? http://facesafe.xyz

Like any startup nowadays, FaceSafe had to get on the MACHINELEARNING™ train. Also, like any other startup, they may have been too careless about exposing their website metadata…

Hint: MACHINELEARNING™ logic: if it looks like noise, swims like noise, and quacks like noise, then it’s probably… a deer?

Solution:

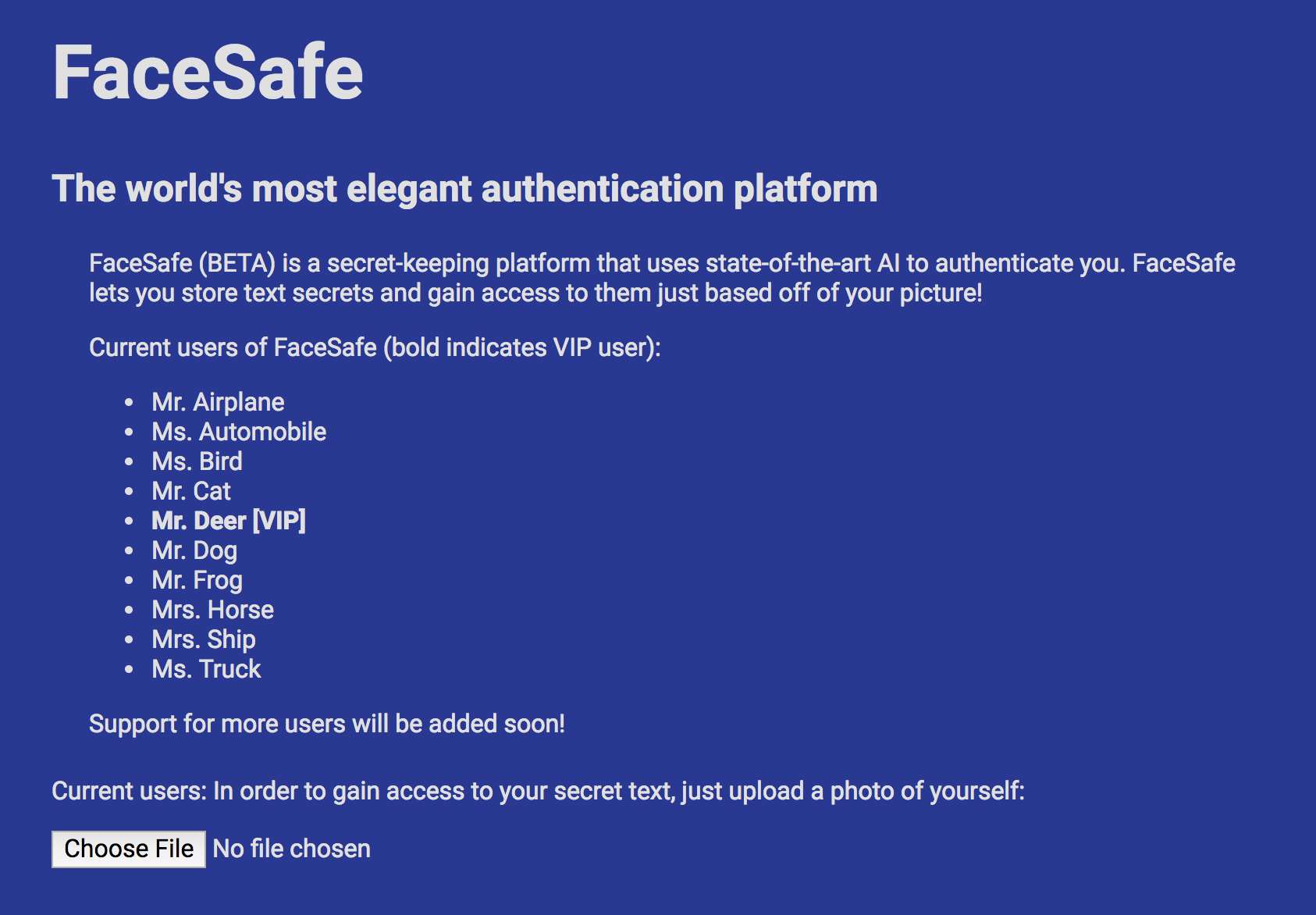

Navigating to http://facesafe.xyz takes us to this page:

The site allows us to upload a 32x32 pixel image. It then performs some sort of identification to determine which user we are. Finally, if it decides that we are “Mr. Deer”, it will hopefully give us the flag.

Someone else on my team found that the robots.txt contained the following listings:

User-agent: *
Disallow: /api/model/auth
Disallow: /api/model/check
Disallow: /api/model/expose
Disallow: /api/model/infer
Disallow: /api/model/model_metadata.json
Disallow: /api/model/model.model
Disallow: /static/event.png
Disallow: /static/find.png
Disallow: /static/bad.png

So this is clearly a machine learning challenge. We can access /api/model/model.model to download the model. It is a saved model in the format produced by Keras (a common Python ML frontend).
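As a rough sketch of how the robots.txt listing can be turned into candidate URLs, the snippet below parses the Disallow entries shown above and picks out the model files. The helper name `disallowed_urls` is made up for illustration, and the snippet only builds the URLs; it does not fetch anything.

```python
from urllib.parse import urljoin

# robots.txt body as listed above
ROBOTS_TXT = """\
User-agent: *
Disallow: /api/model/auth
Disallow: /api/model/check
Disallow: /api/model/expose
Disallow: /api/model/infer
Disallow: /api/model/model_metadata.json
Disallow: /api/model/model.model
Disallow: /static/event.png
Disallow: /static/find.png
Disallow: /static/bad.png
"""


def disallowed_urls(robots_txt, base_url):
    """Return an absolute URL for every non-empty Disallow entry (hypothetical helper)."""
    urls = []
    for line in robots_txt.splitlines():
        field, _, value = line.partition(":")
        if field.strip().lower() == "disallow" and value.strip():
            urls.append(urljoin(base_url, value.strip()))
    return urls


urls = disallowed_urls(ROBOTS_TXT, "http://facesafe.xyz")
# keep only the entries that look like model artifacts
model_files = [u for u in urls if u.endswith((".model", ".json"))]
print(model_files)
```

Any HTTP client can then download the `.model` URL so it can be loaded locally.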

Once we have it downloaded, we can load it:

from keras.models import load_model

m = load_model('./model.model')

Then we can view the structure:

m.summary()

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 32, 32, 32)        896
_________________________________________________________________
activation_1 (Activation)    (None, 32, 32, 32)        0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 30, 30, 32)        9248
_________________________________________________________________
activation_2 (Activation)    (None, 30, 30, 32)        0
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 15, 15, 32)        0
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 15, 15, 64)        18496
_________________________________________________________________
activation_3 (Activation)    (None, 15, 15, 64)        0
_________________________________________________________________
conv2d_4 (Conv2D)            (None, 13, 13, 64)        36928
_________________________________________________________________
activation_4 (Activation)    (None, 13, 13, 64)        0
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 6, 6, 64)          0
_________________________________________________________________
flatten_1 (Flatten)          (None, 2304)              0
_________________________________________________________________
dense_1 (Dense)              (None, 512)               1180160
_________________________________________________________________
activation_5 (Activation)    (None, 512)               0
_________________________________________________________________
dense_2 (Dense)              (None, 10)                5130
_________________________________________________________________
predictions (Activation)     (None, 10)                0
=================================================================
Total params: 1,250,858
Trainable params: 1,250,858
Non-trainable params: 0
_________________________________________________________________

So we have several convolution/max-pooling layers followed by two fully connected (dense) layers. Presumably the final layer is a softmax output that generates probabilities for the 10 image classes.

(I’m not going to explain the actual model much more. This is a very common example of a deep convolutional neural network for image classification, and Googling any of those terms will lead you to more in-depth resources.)
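The parameter counts in the summary can be cross-checked with a little arithmetic. The sketch below assumes 3x3 convolution kernels and a 3-channel (RGB) input. Neither is stated in the summary itself, but both are consistent with the reported numbers. The helper names are made up for illustration.

```python
def conv2d_params(kernel, in_ch, out_ch):
    """Weights plus one bias per output filter, for a square kernel (assumed 3x3 here)."""
    return (kernel * kernel * in_ch + 1) * out_ch


def dense_params(in_units, out_units):
    """Weights plus one bias per output unit."""
    return (in_units + 1) * out_units


counts = {
    "conv2d_1": conv2d_params(3, 3, 32),    # RGB input -> 32 filters
    "conv2d_2": conv2d_params(3, 32, 32),
    "conv2d_3": conv2d_params(3, 32, 64),
    "conv2d_4": conv2d_params(3, 64, 64),
    "dense_1": dense_params(6 * 6 * 64, 512),  # flatten_1 yields 2304 values
    "dense_2": dense_params(512, 10),
}
total = sum(counts.values())

for name, n in counts.items():
    print(name, n)
print("total", total)
```

Under these assumptions the per-layer counts and the total agree with the values printed by m.summary().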

Our goal is to generate a crafted input for this network such that it predicts index 4 (corresponding to Mr. Deer) with a high probability.

I adapted the technique presented here to generate this crafted example.

The generation script essentially starts with a random vector. We define the cost as the probability of predicting class 4. Then we take the gradient of the cost with respect to the input, scale it by a learning rate, and add it to our vector, so that the next time we run the image through the network it is more likely to pick 4.

This is much like standard gradient descent training of a network, except that this time the network weights are fixed and we update the image itself. Since we add the gradient to increase the cost, it is strictly gradient ascent.
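To make the idea concrete without Keras, here is a minimal sketch on a toy model: a single linear layer followed by softmax, with hand-picked fixed weights. Everything in it (the weights `W`, the sizes, the 0.9 threshold, the helper names) is an assumption for illustration and has nothing to do with the real FaceSafe model. Only the input is updated, by adding the gradient of the target-class probability.

```python
import math
import random


def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]


def predict(W, b, x):
    """Class probabilities of a toy linear + softmax model."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    return softmax(logits)


def target_prob_and_grad(W, b, x, target):
    """Return p[target] and its gradient with respect to the input x."""
    p = predict(W, b, x)
    # d p_t / d z_k = p_t * (delta_tk - p_k)
    dz = [p[target] * ((1.0 if k == target else 0.0) - p[k]) for k in range(len(p))]
    # chain rule through the linear layer: d z_k / d x_j = W[k][j]
    grad = [sum(dz[k] * W[k][j] for k in range(len(W))) for j in range(len(x))]
    return p[target], grad


# fixed (never updated) toy weights: 3 classes, 4 inputs
W = [[0.5, -0.2, 0.1, 0.3],
     [-0.4, 0.6, 0.2, -0.1],
     [0.1, 0.1, -0.5, 0.4]]
b = [0.0, 0.0, 0.0]
target = 2

rng = random.Random(0)
x = [rng.random() for _ in range(4)]  # initially random input
lr = 0.2

p, steps = 0.0, 0
while p < 0.9 and steps < 100000:
    p, grad = target_prob_and_grad(W, b, x, target)
    # gradient ascent on the input; the weights stay untouched
    x = [xi + lr * g for xi, g in zip(x, grad)]
    steps += 1

final_p = predict(W, b, x)[target]
print(steps, final_p)
```

The real script follows the same loop, with the Keras backend computing the gradient through the whole convolutional network instead of the closed-form expression used here.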

After letting the following script run for a few minutes, we obtain an image that can fool the network:

import numpy as np
from keras import backend as K

# initially random vector
crafted_input = np.random.rand(1, 32, 32, 3)
# learning rate
lr = 0.2
m_in = m.layers[0].input
m_out = m.layers[-1].output
# probability of predicting class 4
cost = m_out[0, 4]
# calculate the gradient of the cost with respect to the input image
grad = K.gradients(cost, m_in)[0]
# function to calculate current cost and gradient (learning phase 0 = test mode)
step = K.function([m_in, K.learning_phase()], [cost, grad])
# current prediction
p = 0.0
i = 0
while p < 0.50:
    i += 1
    p, gradients = step([crafted_input, 0])
    # move the image in the direction that increases the class 4 probability
    crafted_input += gradients * lr
    if i % 1000 == 0:
        print(p)
print('done!')