pwn2win 2021 - Highest Power (500pt / 1 solve)

JIT VM Reversing

May 30, 2021

rev

jit

vm

Highest Power

Description:

Laura is very curious and ended up discovering that the leaders of the city-state of Rhiza share a secret. This secret is only revealed when they are all gathered. Due to the carelessness of one of them, Laura was able to intercept the message with the program to be used at the time of the disclosure and extract it. But, despite all her efforts, Laura was unable to reveal the shared secret. Therefore, she needs help to understand how the program works and finally find out what this secret is!

NOTE: The binary was built using the arm toolchain from the Ubuntu 16.04 official repos.

NOTE: Not all hosts it tries to connect to should be online. Please try all possible hierarchies.

Files:

- highest-power (provided binary)

- merged.bin (extracted JIT’ed functions at 0x10000 byte offsets)

- rep.py (reproduced behavior in python)

- rep.c (reproduced behavior in C)

Intro

I competed with DiceGang for pwn2win 2021 and we ended up getting first place and qualifying for DEFCON CTF. After just narrowly missing 1st place at PlaidCTF due to some flag hoarding tactics by Perfect Blue, we were extra cautious and used a similar strategy here. We saved most of our flags until the very end, to the surprise of most people including uuunderflow who were leading for most of the competition.

I think most of us would have preferred to submit flags on-schedule as we solved challenges. However, flag hoarding is unfortunately an effective strategy if the top team gets lulled into a false sense of security as s1r1u5 (playing on uuunderflow) points out: https://twitter.com/S1r1u5_/status/1399041957876289537

In most CTFs, flag hoarding can be dangerous because you are never 100% certain about some flags, often you need to submit to the scoreboard to ensure you have the right flag. However, pwn2win used the NIZKCTF platform which allowed us to verify all of our flags offline.

Detecting (and hence banning) flag hoarding is pretty hard. In some cases, certain teams have a reputation of flag hoarding which makes it predictable (you know who you are). In our case, I don’t think anyone expected it. However, the first two real flags we submitted were 1st blood on the Botnet challenge which ended up getting 4 solves and 2nd blood on the Apple II challenge which ended up getting 11 solves. Considering we solved these challenges and not the “easier” ones, this might have clued people in to the fact we were playing competitively.

Overview

Now let’s talk about the actual challenge:

Highest Power was a reversing problem which ended up only getting solved by us. This was definitely a collaborative effort. We had about 1-3 people working on this continuously for ~20 hours (see the timeline at the end for details).

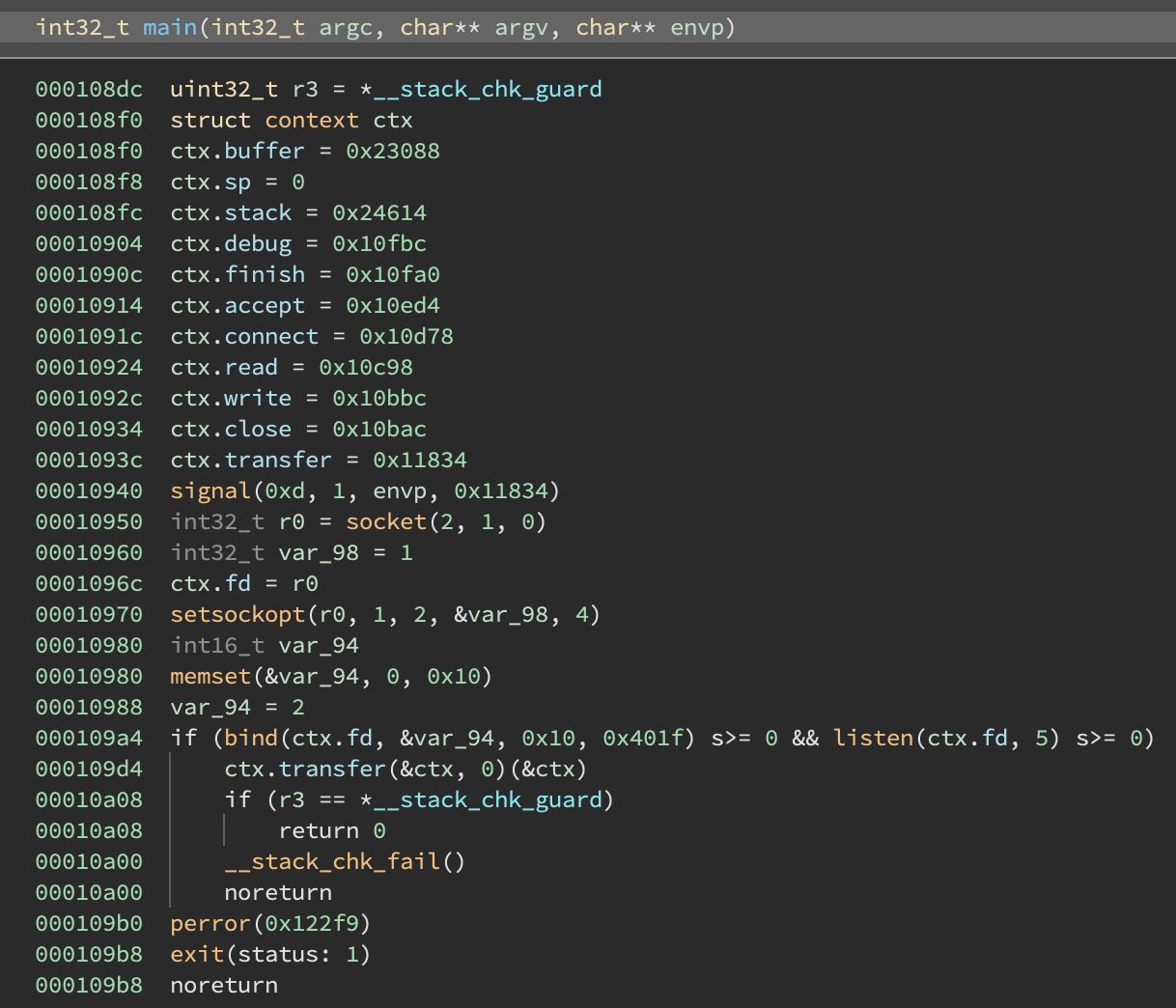

The main function accepts an incoming connection and sets up some function pointers in a context struct:

The context struct looks like this for reference:

struct context {

uint32_t mem;

uint32_t sp;

uint32_t stack[0x10];

int32_t* unk;

int32_t fd;

void (* debug)(struct context *);

void (* finish)(struct context *);

void (* accept)(struct context *);

void (* connect)(struct context *);

void (* read)(struct context *);

void (* write)(struct context *);

void (* close)(struct context *);

void (* transfer)(struct context *, int);

};

It consists of several handler functions (debug, finish, accept,…) a stack + stack pointer, and a memory segment (mem). This context struct acts as a VM context as we will see later.

1: JIT’ing

After receiving a connection, main calls ctx.transfer(&ctx, 0) which initiates a just-in-time compilation event, generating ARM code on-the-fly and then allocating an executable memory segment which it jumps to. The second parameter to transfer specifies the function index and can be in the range [0,39] inclusive.

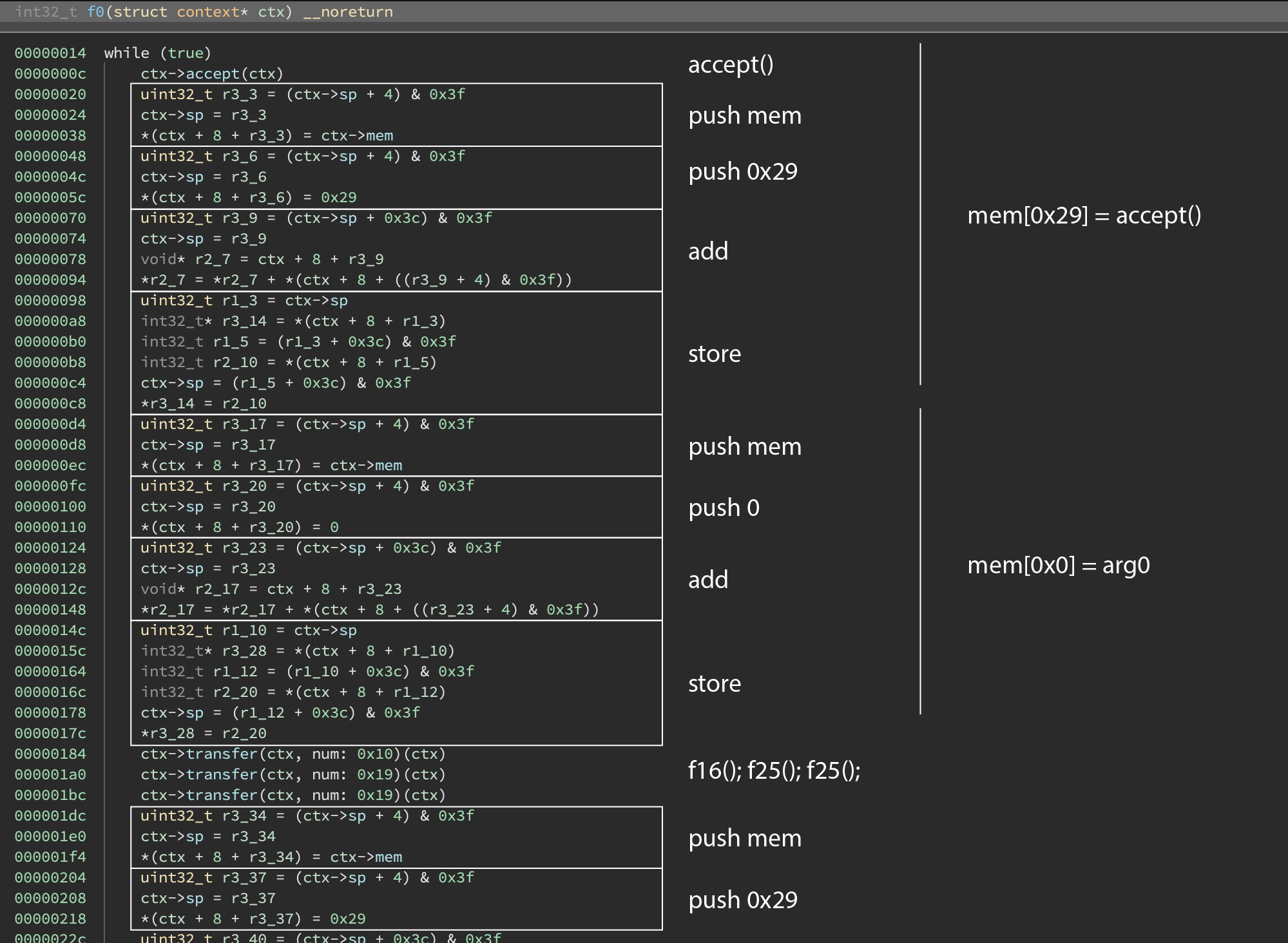

The JIT’ed code is fairly regular and after staring at it, we can identify some patterns and pull out the VM instructions:

We dumped all 40 of the functions into a large binary to analyze further (see merged.bin).

During the competition, we set up a HackMD and manually disassembled all 40 of the functions (see a copy here) and then tried to generate some more readable high-level code and figure out what was going on.

2: What does it do?

The main function is f0 which does the following:

f0_start:

base[0x29] = accept()

base[0] = ?

f16_check_req_path() ; "GET /gather_everyone_to_reveal_our_secret"

f25_cmp_0()

f25_cmp_0()

f11_wait_for_newlines(base[0x29])

f10_is_local()

and

dup

if nz:

f1_good_req()

f25_cmp_0()

if nz:

f2_bad_req()

Essentially it waits for a new connection on port 8000 and then it checks if the input string is GET /gather_everyone_to_reveal_our_secret (f16) and then verifies that the requester ip address starts with 10 (f10). If both these things are true, it executes f1:

f1_good_req:

f19_ok() ; "HTTP/1.0 200 OK\r\n\r\n"

f26_init_things() ; initialize some variables

f8_format_ip()

ip = f9()

f5_talk_to_peer(ip)

drop

ip = f9()

f5_talk_to_peer(ip)

drop

f3()

close(base[0x29])

finish()

This function returns HTTP/1.0 200 OK\r\n\r\n and then tries to communicate with two peer servers (f5) before finally executing f3 which does some crypto mumbo jumbo and prints out some random looking characters.

If f0 didn’t like the input or it was a non 10.x.x.x IP address, it executes f2 instead which prints HTTP/1.0 403 Forbidden\r\n\r\n.

f2_bad_req:

f18_bad() "HTTP/1.0 403 Forbidden\r\n\r\n"

close(base[0x29])

In f16, the GET request can optionally specify an argument from 0 to 2 inclusive like: GET /gather_everyone_to_reveal_our_secret?1 If this is omitted, it defaults to 2.

3: Peer communication

The peer communication routine does the following:

f5_talk_to_peer(ip):

; ------------

; target_fd @ 0x130

; ------------

if [base+0x12d] == 0: return

target_fd = connect(ip, 8000)

if target_fd < 0: return

f17_make_request()

f11_wait_for_newlines(target_fd)

f6_read_body()

close(target_fd)

f17_make_request:

write "GET /gather_everyone_to_reveal_our_secret?"

push 0x30

push base

push 0x12d

add

load

add ; [base+0x12d]+0x30

push 1

sub ; [base+0x12d]+0x30-1

push base

push 0x130

add

load

write

write " HTTP/1.0\r\n\r\n"

f6_read_body: 7

; reads 32 bytes from socket, xors with base[8], base[9], ..., base[8+32]

push 0

f7()

pop

Specifically, it constructs a new HTTP GET request that invokes GET /gather_everyone_to_reveal_our_secret?x where x is the current parameter minus 1. If x is 0, f5 just exits. After receiving the result, it invokes f6 which uses the 32 byte output to xor a stored key (at offset 8 in the VM context).

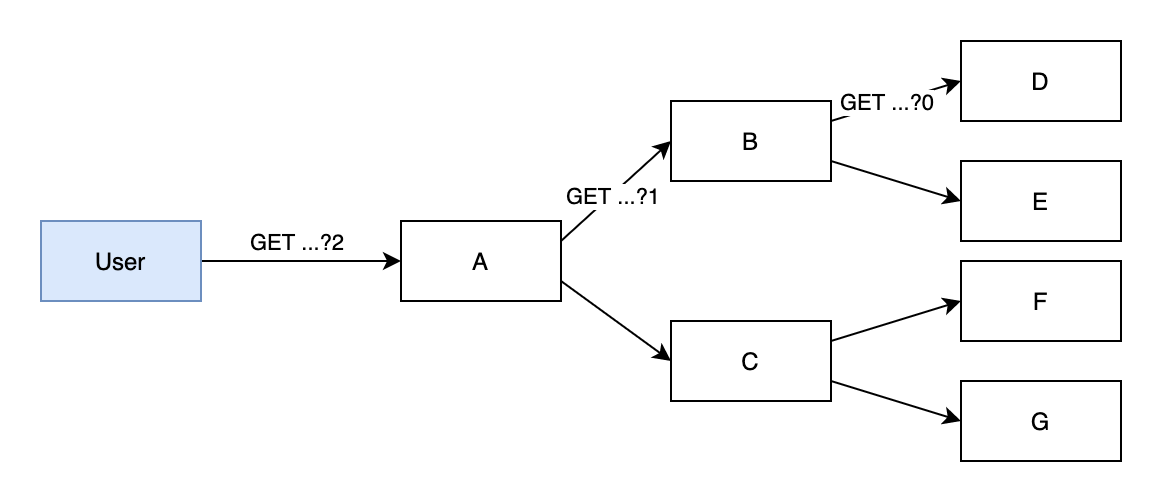

The peer selection process seemed to be deterministic based on the value of the requester ip and it always produced an IP address in the 10.x.x.x range.

Our communication diagram now looks like this:

4: Local IP?

At this point we were a little confused about the IP filter. The 10.x.x.x IP block is allocated for local ip addresses and if there was a challenge server running that expected a local ip, we would somehow need to get on the same network first.

We briefly tried using an RCE on a different challenge to ping some servers in the 10.x.x.x range with the idea that if the pwn2win authors had created a server running this challenge it would be in the same cluster. We didn’t get any responses and then we started rethinking the process.

The interesting thing was that the output of the server appeared to be based on the requester IP address and the output values of the two peer servers. So our pseudo-code for computing the 32 byte outpus looks something like:

def output(ip):

out = ...

o1 = talk_to_peer(get_peer(ip))

mutate(out, o1)

o2 = talk_to_peer(get_peer(ip))

mutate(out, o2)

mutate(out)

return out

We would somehow need to brute force all possible requester ip’s to try every possible tree of values in the hopes that one combination would produce the flag.

After re-reading the challenge description, we saw the following: Not all hosts it tries to connect to should be online. Please try all possible hierarchies.

So if some hosts are offline, our model looks more like:

def output(ip):

out = ...

try:

o1 = talk_to_peer(get_peer(ip))

mutate(out, o1)

except:

pass

try:

o2 = talk_to_peer(get_peer(ip))

mutate(out, o2)

except:

pass

mutate(out)

return out

We noticed that if both peers were offline, the output buffer would be the same as if we invoked with the argument 0 (indicating a leaf node).

5. Solution

At this point we understood that we needed to sample all possible initial requester IP’s and all possible tree hierarchies. So the next step was to implement this solution.

First we tried simply using a gdb script to hook and modify the requester IP during execution. For example the following sets the IP to 10.18.52.86:

set arch arm

target remote :1234

b *0x00011dd8

c

set $r0 = 0x0a123456

This proved to be very slow, mainly due to the fact that this was running inside QEMU (due to being an ARM executable).

Next we re-implemented the program logic in Python and this was much faster but it was expected to take several hours to finish the search and that would be close to the end of the competition so we decided to optimize further.

To maximize the chances of succeeding, three of us decided to re-implement in separate languages. I (hgarrereyn) rewrote in C, defund in C++ and Aplet123 in Rust.

Around this time Aplet123 also wrote a really cool JIT-disassmbler in Python to ensure we didn’t screw up any of the emulation steps.

Eventually we got the C version working and the brute force was now feasible. The solution flow essentially works like this:

- Enumerate all 10.x.x.x addresses and store the two computed peer addresses and the default 32 byte output buffer (for arg 0)

- Enumerate all 10.x.x.x addresses and compute 3 output buffer variants (just peer A, just peer B, both peers A&B).

- Enumerate all 10.x.x.x addresses and compute 16 output buffer variants: (peer A buffer is selected from one of the previous 4 and peer B buffer is selected from one of the previous 4).

We implemented poor-man’s multiparallelism (coloquially referred to as DiceGrid™) by having multiple people in the Discord compile and rerun each variant of the 3rd phase, tracking progress in Google sheets.

See rep.c for our C implementation of this problem.

Eventually we found the flag in the (1,2) variant: CTF-BR{j1t_b4s3d_vms_4r3_c00l!!}

Timeline

(all times in central time)

May 29th (second day of ctf)

- 12:00 PM: Defund and Aplet123 start working on the problem.

- 1:00 PM: Defund figures out VM context struct format.

- 1:10 PM: Aplet123 identifies the push and pop JIT wrapper functions and figures out the stack format.

- 3:00 PM: Defund and Aplet123 figure out that the

transferfunction is generating executable code. - 3:15 PM: hgarrereyn joins to help figure out how to run in qemu (there are some shared library annoyances)

- 3:45 PM: qemu is set up and gdb is working

- 4:00 PM: hgarrereyn confirms

transferfunction returns pointer to assembly-looking things - 4:15 PM: Defund begins dumping the transfer functions.

- 5:40 PM: Defund finishes dumping all 40 JIT functions and starts annotating the assembly.

- …: People are afk for dinner

- 7:30 PM: Defund figures out function call hierarchies.

- 9:00 PM: Defund and Aplet123 more reversing, identify the

HTTP/1.0 200 OK\r\n\r\nresponse. - 9:10 PM: hgarrereyn returns from afk, gets tldr from Defund

- 9:30 PM: setup shared hackmd to work on manual disassembling

- 10:40 PM: hgarrereyn identifies the

GET /gather_everyone_to_reveal_our_secretfunction - 11:50 PM: Defund and hgarrereyn finish the manual disassembly and start working on high-level understanding.

May 30th

- 12:30 AM: hgarrereyn figures out swap function (f39)

- 12:50 AM: Defund figures out string debug function (f22)

- 1:00 AM: Defund figures out buffer initialization (f20)

- 1:30 AM: hgarrereyn figures out peer connection function (f5) and the HTTP GET request.

- 1:34 AM: ginkoid ensures the ctf problem is following spec

- 1:40 AM: hgarrereyn figures out decrementing parameter thing

- 1:50 AM: hgarrereyn, defund and Rob start trying to figure out how to make a valid connection to the problem. Ginkoid starts enumerating GCP IPs int the hopes we can do a network scan.

- 2:30 AM: hgarrereyn figures out it filters non 10.x.x.x ip addresses.

- 2:40 AM: hgarrereyn makes valid request after forging ip in gdb.

- 2:50 AM: Infuzion joins and uses home network to make valid requests and provided target ip’s using strace.

- 3:00 AM: Infuzion figures out the parameter is constrained between 0-2.

- 3:10 AM: Ginkoid finishes scanning GCP us-central1 with no responses. We have a brief discussion about using an RCE from a different challenge to try to ping local addresses.

- 3:20 AM: Ginkoid uses our solution for Ruthless Monster to get RCE in the same GCP network but nothing seems to respond.

- 3:25 AM: Infuzion figures out the output data depends on the requester’s IP address.

- 3:40 AM: We realize we need to brute force all possible requester IPs.

- 3:50 AM: hgarrereyn briefly tries a gdb script but it’s too slow.

- 4:00 AM: hgarrereyn and Defund start trying to reproduce in Python.

- 4:30 AM: Initial requester IP mutation part done.

- 5:00 AM: hgarrereyn dumps a trace of the VM buffer at each function call to assist in debugging.

- 6:15 AM: hgarrereyn gets Python re-implementation to match the binary.

- 6:30 AM: hgarrereyn sets up multiprocessing and starts running on a chonky 128-core machine.

- 7:00 AM: We realize Python is going to take too long so we start reimplementing in a faster language. hgarrereyn in C, Defund in C++, Aplet123 in Rust

- 7:30 AM: hgarrereyn finishes C re-implementation, even running in a single thread is faster than 128-core python.

- 8:00 AM: C impl finishes phase 1 and 2.

- 8:15 AM: We “multiprocess” phase 3 by distributing the code to several people in Discord and tracking progress with Google sheets.

- 8:30 AM: Meow finds the flag in variant (1,2)!