CONFidence CTF 2019 Teaser - Neuralflag (188pt)

ML Crafted Input

March 17, 2019

machine learning

Neuralflag (188pt)

Misc

Description: My neural network accepts only one secret image! I think this is the best method for authentication. There is no source code to reverse engineer, seems uncrackable to me.

Can you hack into inner workings of neural networks?

Solution:

We are provided with a tar file containing a python training script and a saved keras model.

We can load and examine the model:

from keras.models import load_model

m = load_model('./model2.h5')

m.summary()

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
flatten_1 (Flatten)          (None, 550)               0
_________________________________________________________________
dense_1 (Dense)              (None, 550)               303050
_________________________________________________________________
dense_2 (Dense)              (None, 550)               303050
_________________________________________________________________
dense_3 (Dense)              (None, 2)                 1102
=================================================================
Total params: 607,202
Trainable params: 607,202
Non-trainable params: 0

This model takes an 11x50 matrix as input, flattens it to 550 values, and passes it through three dense (fully connected) layers. The final layer has only two nodes and uses a softmax activation to produce probabilities. From reading the training script, it is clear that out[1] corresponds to p(flag), and therefore out[0] is simply p(!flag).
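As a quick sanity check on the summary above, the parameter counts can be reproduced by hand. This is only a sketch: it assumes each dense layer has one weight per input/output pair plus one bias per output, and the helper name `dense_params` is made up for illustration.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

input_size = 11 * 50          # the flattened 11x50 input
layer_sizes = [550, 550, 2]   # dense_1, dense_2, dense_3

counts = []
n_in = input_size
for n_out in layer_sizes:
    counts.append(dense_params(n_in, n_out))
    n_in = n_out  # the next layer consumes this layer's output

total = sum(counts)
print(counts, total)
```

The per-layer counts and the total match the figures reported by m.summary().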

Additionally, since the network was only trained on a single positive example, it likely did not learn a general representation of “flag images” but rather memorized that single example. If we can find an input x such that model(x)[1] is high, our input is likely very similar to the original flag example.

To generate this input, we can start with a random 11x50 matrix and compute the gradient of the second output with respect to the input image. Then we nudge the input in the direction of the gradient (to increase the second output) and repeat.
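The idea of gradient ascent on the input can be illustrated without Keras. The sketch below is not the challenge network: it swaps in a hypothetical toy score that peaks at a made-up "memorized" pattern (`target`), purely to show how repeatedly stepping along the gradient pulls a random input toward whatever the score function prefers.

```python
import random

# Hypothetical pattern standing in for the memorized flag image
target = [0.0, 1.0, 1.0, 0.0, 1.0]

def score(x):
    """Toy stand-in for the flag output: highest when x equals target."""
    return -sum((xi - ti) ** 2 for xi, ti in zip(x, target))

def grad(x):
    """Gradient of the toy score with respect to the input x."""
    return [-2.0 * (xi - ti) for xi, ti in zip(x, target)]

random.seed(0)
x = [random.random() for _ in target]  # random starting input
initial_score = score(x)

lr = 0.2  # step size
for _ in range(50):
    g = grad(x)
    x = [xi + lr * gi for xi, gi in zip(x, g)]  # step along the gradient

final_score = score(x)
recovered = [round(xi) for xi in x]
print(initial_score, final_score, recovered)
```

In the real attack the score and its gradient come from the network itself, so the pattern that emerges is whatever the network memorized.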

However, for most random inputs, the network predicts !flag with very high probability. Because the final layer is a softmax, which saturates when the prediction is this confident, the gradient will be very close to zero and won’t give us much information.
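The saturation effect is easy to see numerically. In this sketch (plain Python, with made-up logit values), the derivative of p(flag) with respect to its own logit is p * (1 - p) for a softmax, which collapses toward zero once the network is confident in !flag, whereas the raw logit itself always has derivative 1 with respect to itself.

```python
import math

def softmax2(z0, z1):
    """Numerically stable softmax over two logits."""
    m = max(z0, z1)
    e0, e1 = math.exp(z0 - m), math.exp(z1 - m)
    s = e0 + e1
    return e0 / s, e1 / s

def dp1_dz1(z0, z1):
    """Derivative of the softmax output p1 with respect to the logit z1."""
    _, p1 = softmax2(z0, z1)
    return p1 * (1.0 - p1)

# Undecided network: gradient is at its maximum
balanced = dp1_dz1(0.0, 0.0)

# Network confident in !flag (hypothetical logits): gradient vanishes
saturated = dp1_dz1(20.0, -20.0)

print(balanced, saturated)
```

Optimizing the pre-softmax output sidesteps this, since its gradient is not scaled by the vanishing p * (1 - p) factor.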

Therefore, before this step, we need to switch the output layer to a linear activation so that we can obtain a useful gradient:

import keras

# change output layer to linear

m.get_layer('dense_3').activation = keras.activations.linear

# save and reload model to apply change

m.save('./model_linear.h5')

m = load_model('./model_linear.h5')

Now we can use the following script to perform this calculation:

import keras.backend as K

import numpy as np

# initially random input

crafted_input = np.random.rand(1,11,50)

# learning rate

lr = 0.2

m_in = m.input

m_out = m.output

# linear (pre-softmax) output for the flag class, index 1

cost = m_out[0,1]

# calculate the gradient through our model

grad = K.gradients(cost, m_in)[0]

# function to calculate current cost and gradient

step = K.function([m_in, K.learning_phase()], [cost, grad])

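# fetch the gradient and apply it, repeating for several steps
# (the count of 100 is an arbitrary choice)

for _ in range(100):
    p, gradients = step([crafted_input, 0])
    crafted_input += gradients * lr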

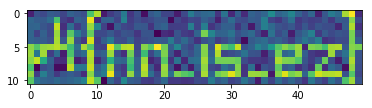

We obtain the following image: